A data exchange platform such as IUDX or ADeX curates datasets from various vendors based on how reliable, relevant and accurate the datasets are. A byproduct of this process is that the data that’s hosted on the platform ends up being quite valuable. One of the ways to realise this value is to build a marketplace. This encourages vendors to produce data of good quality. Let’s look at the technical aspects of building such a marketplace.

When designing a marketplace, the primary sources of inspiration are the system architectures of Amazon.com or Flipkart, as they fit the definition of a marketplace. However, there are a few key differences between marketplaces that host physical products and those that host data, or a collection of data (dataset henceforth), as products. One major difference is that purchasing a physical product means taking complete ownership of it, i.e., buying a complete unit, whereas purchasing data as a ‘product’ could have different meanings. For instance, access to data can be temporal, with purchasing units in days or months. Another way to quantify access is in bytes of data consumed. A second key difference is that sharing purchased data could violate the purchasing policy, whereas a physical product can be shared or even resold.

But what is a marketplace after all? “A marketplace or market place is a location where people regularly gather for the purchase and sale of provisions, livestock, and other goods.” – Wikipedia

A marketplace is a catalogue of products hosted by various vendors (providers henceforth) to be discovered by buyers (consumers henceforth). A user should be able to create an inventory of products, search for products on the storefront and make a payment to purchase a product. They should also be able to view their past purchases. A provider should additionally be able to define their products and the variants available for each, and to identify who bought their products.

Note: In the case of a data marketplace, the product could be a dataset or a combination of datasets bundled by the provider. Additionally, the purchase of a product implies that the consumer is getting consent from the provider to access the dataset(s) of the product. The consumer is NOT getting ownership of the data.

For example, let us assume that the bus transit data of a city is provided by its municipal corporation. A consumer, such as a company that aims to provide analytics on this data, would purchase the right to access it from the marketplace. This would allow them to obtain a token from the data exchange’s authorization server, which is ultimately used to fetch the intended data. The consumer is expected to perform the intended operations on this data and is bound not to share the raw data with anyone. However, enforcing this binding is outside the scope of the marketplace and the data exchange platform. Organisations providing the data will be expected to implement protocols to ensure that right of ownership is maintained (such as digital rights management, i.e. DRM, for video streams).

Now that the technical requirements are clear, we can start designing the system. To keep things simple, let’s divide the flows into a consumer view and a provider view.

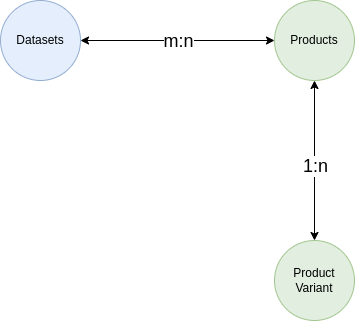

A provider should be able to create a product, delete a product they own and list the purchases made against their products. A product can also have multiple variants (e.g. a shirt listed on Amazon can come in various colours and sizes), so the provider should be able to create, update and delete variants within each product they host.

Things to note here:

- We need to ensure that only the provider of the datasets is allowed to host products

- A dataset can be part of different products

- A product variant would need to be mapped to an existing product
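The three rules above can be sketched as a small in-memory catalogue. This is only an illustration under stated assumptions: the `Catalogue` and `Product` classes, their method names and the `dataset_owners` mapping are hypothetical and not part of any IUDX or ADeX API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Product:
    product_id: str
    provider_id: str
    dataset_ids: List[str]
    # variant name -> free-form variant specification (hypothetical shape)
    variants: Dict[str, dict] = field(default_factory=dict)


class Catalogue:
    def __init__(self, dataset_owners: Dict[str, str]) -> None:
        # dataset_id -> provider_id that owns the dataset
        self.dataset_owners = dataset_owners
        self.products: Dict[str, Product] = {}

    def create_product(self, product_id: str, provider_id: str,
                       dataset_ids: List[str]) -> Product:
        # Rule 1: only the provider of a dataset may host it in a product.
        for dataset_id in dataset_ids:
            if self.dataset_owners.get(dataset_id) != provider_id:
                raise PermissionError(
                    f"{provider_id} does not own dataset {dataset_id}")
        # Rule 2: nothing stops the same dataset appearing in other products.
        product = Product(product_id, provider_id, list(dataset_ids))
        self.products[product_id] = product
        return product

    def add_variant(self, product_id: str, name: str, spec: dict) -> None:
        # Rule 3: a variant must be mapped to an existing product.
        if product_id not in self.products:
            raise KeyError(f"unknown product {product_id}")
        self.products[product_id].variants[name] = spec
```

In a real service these checks would more likely live in the product service and be backed by database constraints rather than Python dictionaries.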

To put things into perspective, assume that a city municipality has various air quality sensor datasets from different suburbs. They can host these datasets as a product with many variants based on the duration of access, the amount of data accessed, the type of data access and other parameters. One variant could grant access for 10 days, with a 10 GB limit and at most 100 API calls. Another could allow 100 GB of access but only to archive files.
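One way to picture such variants is as a set of optional limits that are checked on each access. The sketch below is an assumption about how a variant might be modelled; the field names (`validity_days`, `max_bytes`, `max_api_calls`, `access_type`) and the `is_access_allowed` helper are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

GB = 10**9  # decimal gigabyte, chosen here for simplicity


@dataclass(frozen=True)
class Variant:
    name: str
    validity_days: Optional[int] = None   # None means no time limit
    max_bytes: Optional[int] = None       # None means no volume limit
    max_api_calls: Optional[int] = None   # None means no call limit
    access_type: str = "api"              # e.g. "api" or "archive"


@dataclass
class Usage:
    purchased_at: datetime
    bytes_used: int = 0
    api_calls: int = 0


def is_access_allowed(variant: Variant, usage: Usage,
                      now: datetime, access_type: str) -> bool:
    """Return True only if every limit of the variant is still satisfied."""
    if access_type != variant.access_type:
        return False
    if variant.validity_days is not None:
        if now > usage.purchased_at + timedelta(days=variant.validity_days):
            return False
    if variant.max_bytes is not None and usage.bytes_used >= variant.max_bytes:
        return False
    if (variant.max_api_calls is not None
            and usage.api_calls >= variant.max_api_calls):
        return False
    return True


# The two variants described above, expressed with these hypothetical fields.
ten_day = Variant("10-day", validity_days=10, max_bytes=10 * GB,
                  max_api_calls=100)
archive = Variant("archive-100GB", max_bytes=100 * GB, access_type="archive")
```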

The same city municipality can host another product with air quality sensor datasets and traffic datasets combined, with its own variants.

A consumer should be able to discover products by searching for a product name, a dataset name or a provider name. As an added UX feature, the marketplace should list popular products and datasets on the consumer landing page. Ultimately, the consumer should be able to add products to a cart and purchase the items in the cart. Refunds are another thing to consider, but they are out of the scope of this discussion for now.
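As a rough illustration of the discovery flow, the sketch below matches a query against product, dataset and provider names with a case-insensitive substring test. The `Listing` type and `search` function are hypothetical; a production storefront would more likely rely on a database index or a dedicated search engine.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Listing:
    product_name: str
    provider_name: str
    dataset_names: Tuple[str, ...]


def search(listings: List[Listing], query: str) -> List[Listing]:
    """Return listings whose product, provider or dataset name contains the query."""
    needle = query.strip().lower()
    if not needle:
        return []
    return [
        listing for listing in listings
        if needle in listing.product_name.lower()
        or needle in listing.provider_name.lower()
        or any(needle in name.lower() for name in listing.dataset_names)
    ]
```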

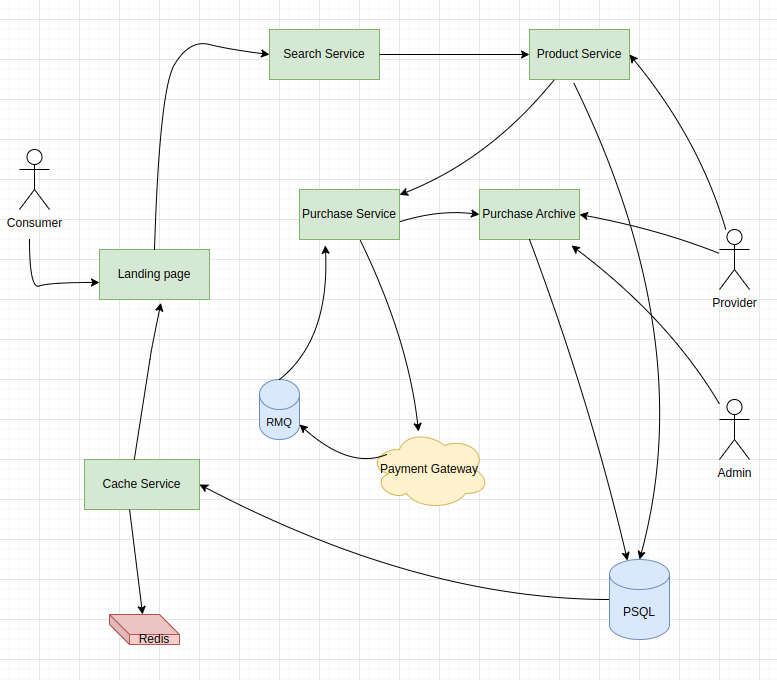

With the design in place, we can start to think of data stores, communication channels, payment gateways and so on. Firstly, we can use a relational data store such as PostgreSQL (PSQL henceforth) or MySQL to store the product and dataset information, as the structure of the objects is going to be consistent in this case. We can use the same data store for the purchase information and payment history, as the ACID properties of a relational DB come in handy there. To serve the popular datasets and products, a cache layer such as Redis can be introduced.
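A possible relational layout is sketched below. It uses Python's built-in `sqlite3` module purely as a stand-in so the example runs anywhere; the table and column names are assumptions, and a PSQL or MySQL deployment would differ in types and tooling. The `popular_products` query shows the kind of result that could be placed in a cache such as Redis (the caching itself is not shown).

```python
import sqlite3

# Hypothetical schema: a dataset can belong to many products (product_dataset),
# and every variant and purchase must reference an existing row.
SCHEMA = """
CREATE TABLE provider (id TEXT PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE dataset (
    id TEXT PRIMARY KEY,
    provider_id TEXT NOT NULL REFERENCES provider(id),
    name TEXT NOT NULL);
CREATE TABLE product (
    id TEXT PRIMARY KEY,
    provider_id TEXT NOT NULL REFERENCES provider(id),
    name TEXT NOT NULL);
CREATE TABLE product_dataset (
    product_id TEXT NOT NULL REFERENCES product(id),
    dataset_id TEXT NOT NULL REFERENCES dataset(id),
    PRIMARY KEY (product_id, dataset_id));
CREATE TABLE variant (
    id TEXT PRIMARY KEY,
    product_id TEXT NOT NULL REFERENCES product(id),
    name TEXT NOT NULL);
CREATE TABLE purchase (
    id INTEGER PRIMARY KEY,
    consumer_id TEXT NOT NULL,
    variant_id TEXT NOT NULL REFERENCES variant(id),
    status TEXT NOT NULL);
"""


def open_store() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite needs this to enforce FKs
    conn.executescript(SCHEMA)
    return conn


def popular_products(conn: sqlite3.Connection, limit: int = 5):
    """Products ranked by number of completed purchases."""
    return conn.execute(
        """
        SELECT p.name, COUNT(*) AS purchases
        FROM purchase pu
        JOIN variant v ON v.id = pu.variant_id
        JOIN product p ON p.id = v.product_id
        WHERE pu.status = 'COMPLETED'
        GROUP BY p.id
        ORDER BY purchases DESC, p.name
        LIMIT ?
        """,
        (limit,),
    ).fetchall()
```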

Note that we need to make sure that the purchase flow is robust and that the system is decoupled from any failure on the payment gateway. A cart and order service that interacts with the gateway can be introduced. When an order is placed and a payment is initiated, there can be two scenarios.

- One, the payment succeeds and the order completes. This should trigger the creation of an invoice and the clearing of the cart.

- Two, the payment fails and the order is pending. This may trigger a retry on the gateway, or create an invoice indicating the failed purchase, upon which payment can be manually retried.

In both situations the state of a user session has to be stored.
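The two scenarios can be sketched as a small order state machine. Everything here is an assumption made for illustration: the `charge` callable stands in for a real payment gateway client, the retry count is arbitrary, and the order state is kept in memory, whereas a real service would persist it.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class OrderStatus(Enum):
    CREATED = "created"
    PENDING = "pending"
    COMPLETED = "completed"


@dataclass
class Order:
    order_id: str
    items: List[str]
    status: OrderStatus = OrderStatus.CREATED
    invoices: List[str] = field(default_factory=list)
    attempts: int = 0


def place_order(order: Order, cart: List[str],
                charge: Callable[[str], bool], max_attempts: int = 2) -> Order:
    """Try to pay for an order; `charge` is a hypothetical gateway call."""
    while order.attempts < max_attempts:
        order.attempts += 1
        try:
            paid = charge(order.order_id)
        except Exception:
            # A gateway outage is treated like a failed payment so that
            # the order service itself never crashes.
            paid = False
        if paid:
            # Scenario one: order completes, invoice is created, cart is cleared.
            order.status = OrderStatus.COMPLETED
            order.invoices.append(f"invoice:{order.order_id}:paid")
            cart.clear()
            return order
    # Scenario two: retries exhausted, order stays pending with a failure
    # invoice, and the cart is kept so payment can be retried manually.
    order.status = OrderStatus.PENDING
    order.invoices.append(f"invoice:{order.order_id}:failed")
    return order
```

Keeping the cart intact on failure is one possible design choice; it lets the consumer retry without rebuilding the order.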

We also need to set up an admin flow to verify the purchase of a product (equivalent to the consumer receiving consent to access the datasets in the product, constrained by the variant specifications). Note that the admin here is the DX platform’s authorization server. The verification will be used to issue tokens when data access is requested. A policy is written for a consumer against all the datasets purchased, which acts as the source of truth for issuing tokens. This can be achieved by writing each successful purchase to a message outbox, such as a RabbitMQ (RMQ henceforth) queue, to which the authorization server can subscribe.
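The hand-off between the marketplace and the authorization server might look like the sketch below. It uses Python's standard `queue.Queue` as a stand-in for an RMQ queue, and the `PurchaseEvent` and `AuthorizationServer` names, along with the policy shape, are hypothetical rather than the actual DX platform interfaces.

```python
import queue
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Tuple


@dataclass(frozen=True)
class PurchaseEvent:
    consumer_id: str
    dataset_ids: Tuple[str, ...]
    expires_at: datetime  # derived from the purchased variant


class AuthorizationServer:
    def __init__(self) -> None:
        # (consumer_id, dataset_id) -> expiry; the source of truth for tokens
        self.policies: Dict[Tuple[str, str], datetime] = {}

    def consume(self, outbox: "queue.Queue[PurchaseEvent]") -> None:
        """Drain the outbox and write one policy per purchased dataset."""
        while True:
            try:
                event = outbox.get_nowait()
            except queue.Empty:
                return
            for dataset_id in event.dataset_ids:
                self.policies[(event.consumer_id, dataset_id)] = event.expires_at

    def can_issue_token(self, consumer_id: str, dataset_id: str,
                        now: datetime) -> bool:
        """A token may be issued only while a matching policy is valid."""
        expiry = self.policies.get((consumer_id, dataset_id))
        return expiry is not None and now <= expiry
```

The marketplace only publishes events and never calls the authorization server directly, so a slow or unavailable subscriber does not block purchases.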

Fig. 2 shows the complete architecture with all the different players interacting with it. For simplicity, the product variant service has been abstracted into the product service itself.

With a basic design for the system in place, a few open questions come up. Will the marketplace platform be an aggregator of the funds flowing through the system (like Amazon.in), or will payments be made offsite and go directly to the vendor’s account (like Shopify)? Will the marketplace be able to handle multiple invoices on a single order? Will it be able to provide digital rights on the data hosted? How will the system scale when the number of users increases significantly?

No software system is designed to be completely optimised and highly scalable from the beginning. Scaling is an incremental process: the system should scale only when required, as the cost of over-optimisation will be very high compared to the footfall. The design should, however, have contingencies in place for when that need arises.

Author:

Pranav Doshetty

Senior Software Engineer
